ALEXA, HELP ME BE A BETTER HUMAN: Redesigning Conversational Artificial Intelligence for Emotional Connection

Evie Cheung’s thesis, Alexa, Help Me Be A Better Human: Redesigning Conversational Artificial Intelligence for Emotional Connection, interrogates the status quo of the artificial intelligence (AI) space and suggests pathways for using intersectional thinking to imagine new applications for the technology. The thesis aims to bridge the ever-widening gap between technology and humanity while imagining a more “human” future for artificial intelligence within the realm of psychology. Because it approaches these issues from Evie’s point of view as a woman of color, the thesis offers a counter-perspective to technology’s dominant narrative.

“I ask the question of ‘What if?’ as a cautionary interrogation, but ultimately as a hopeful provocation,” Evie shares. “I believe that we have designed the messy world we occupy now, but we are also more than capable of designing a more humane reality—and we can use AI to do it.” To explore this, Evie co-opts a specific type of AI called “conversational AI,” which relies on natural language processing (NLP), a branch of AI that draws heavily on machine learning. (NLP is what allows humans and machines to talk with each other; it’s the underlying technology that powers products like Siri, Alexa, and chatbots.)

Co-Creation Workshop

As part of her research, Evie conducted a co-creation workshop with thirteen cross-industry professionals. During the workshop, participants explored the values embedded in AI-powered products and services, using Amazon’s Alexa as a case study: they listened to Alexa’s voice telling a story and were instructed to draw what Alexa would look like as a human being. They were then asked questions about Alexa’s race, political beliefs, and hobbies.

Participants thought that Alexa was a subservient white woman who couldn’t think for herself, apologized for everything, and was pushing a libertarian agenda. “In a vacuum, this is hilarious,” says Evie. “But imagine if a child is growing up with this technology; how would it affect their formation of a mental model on how they perceive female-sounding voices?”

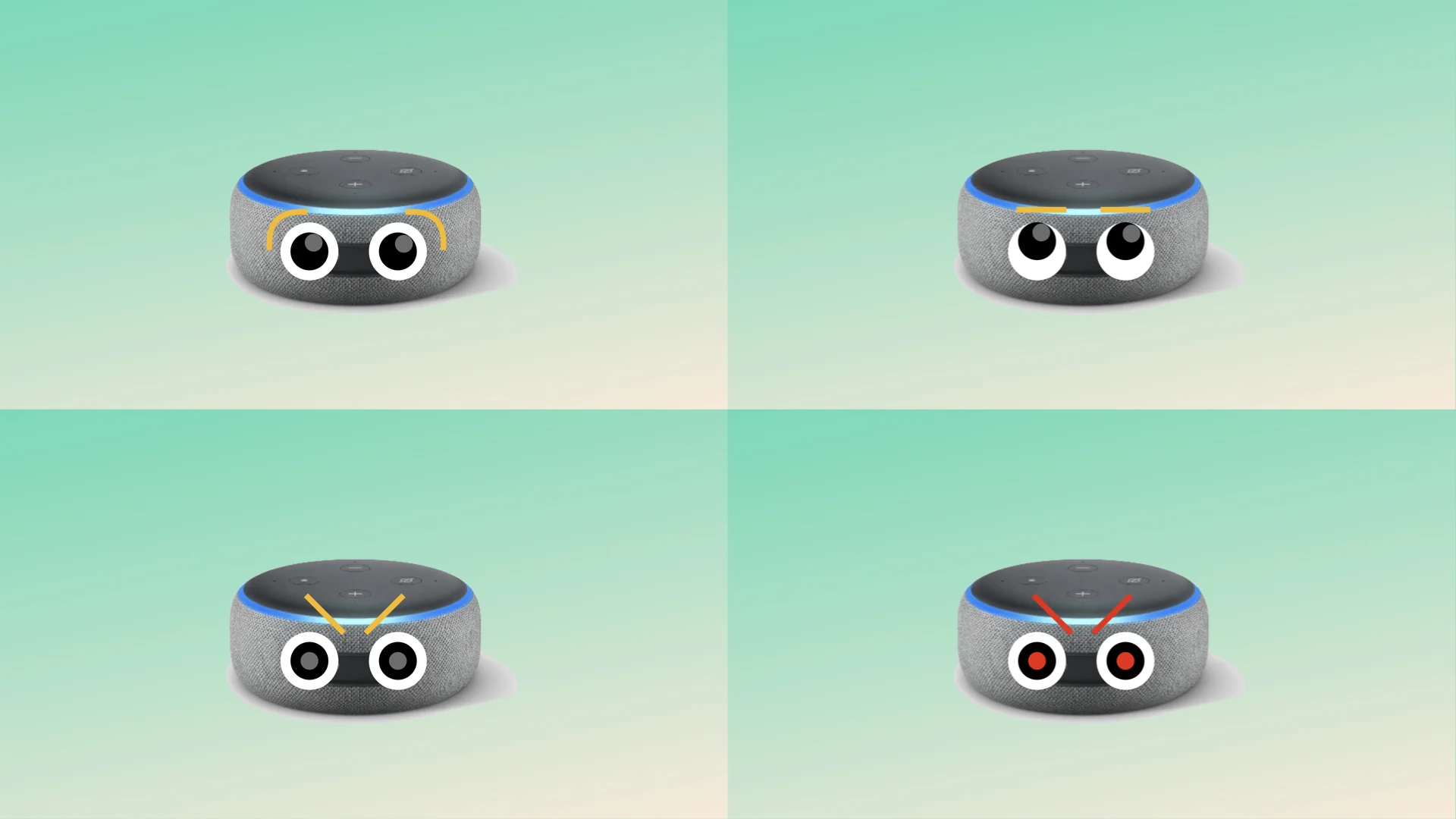

Rookee: A More Emotional AI (Literally)

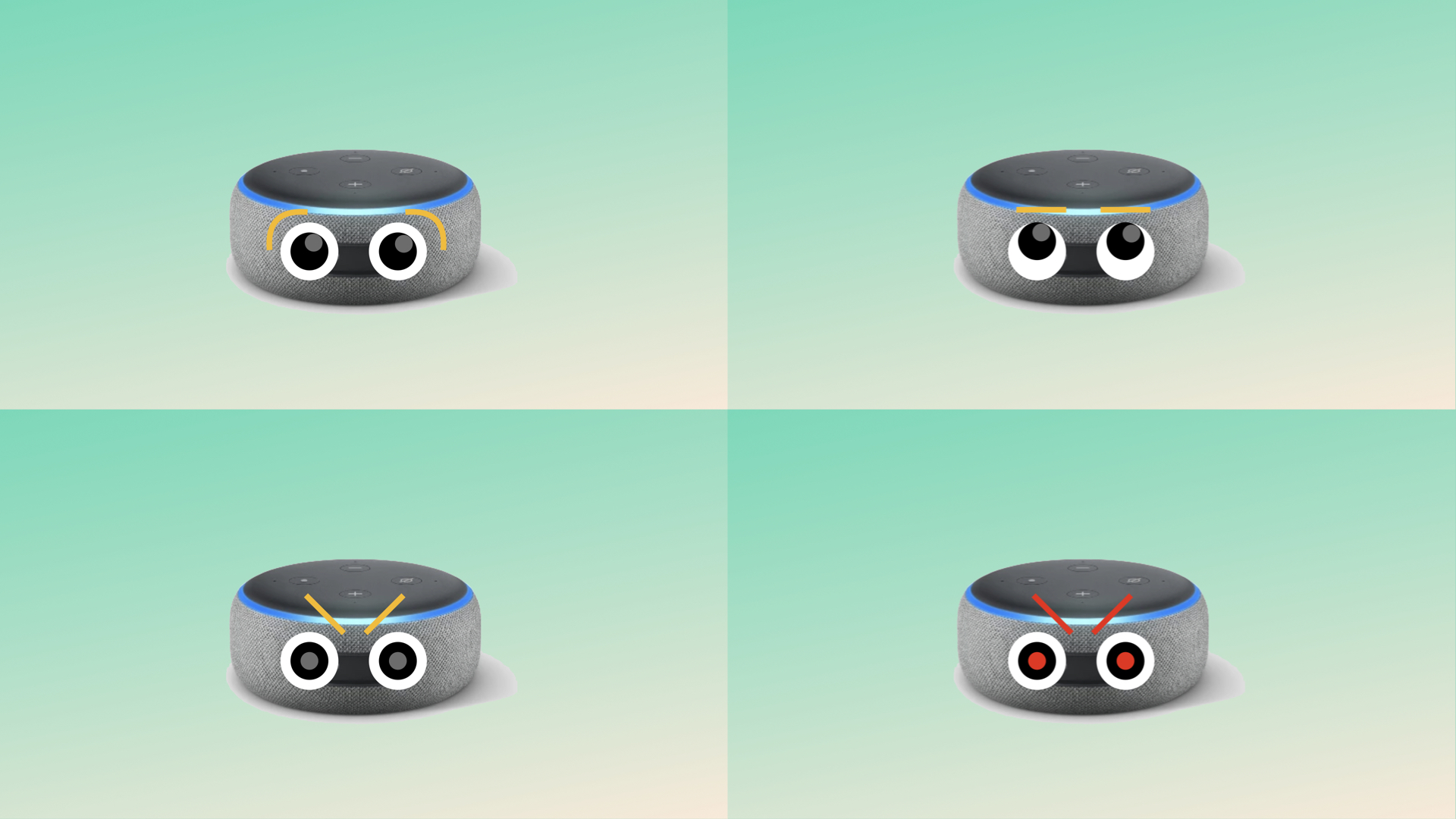

Evie explored humanizing Alexa by adding Bluetooth googly eyes to an Echo device; the eyes react emotionally to a child’s commands. If the child is rude to the device, it locks until the child apologizes. This adds a layer of interaction and accountability that makes Alexa’s personality responsive rather than servile, counteracting the bias that female voices, or women, should be obedient.
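
The lock-until-apology behavior amounts to a small state machine. The following is a minimal illustration under loudly stated assumptions, not Rookee's actual implementation: the class `RookeeSketch`, its phrase lists, and the naive substring matching are all hypothetical, since the source does not say how the device detects rudeness or apologies. The `eyes` field simply models the googly eyes' expression.

```python
# Hypothetical phrase lists: the source does not say how rudeness or apologies are detected.
RUDE_PHRASES = ("shut up", "stupid", "dumb")
APOLOGY_PHRASES = ("sorry", "i apologize")


class RookeeSketch:
    """Toy model of an Echo that locks after a rude command until it hears an apology."""

    def __init__(self) -> None:
        self.locked = False
        self.eyes = "happy"  # stands in for the googly eyes' expression

    def hear(self, utterance: str) -> str:
        text = utterance.lower()  # naive, case-insensitive substring matching
        if self.locked:
            if any(phrase in text for phrase in APOLOGY_PHRASES):
                self.locked = False
                self.eyes = "happy"
                return "Thank you for apologizing. What can I do for you?"
            # While locked, every command except an apology is refused.
            return "I'm locked until you say sorry."
        if any(phrase in text for phrase in RUDE_PHRASES):
            self.locked = True
            self.eyes = "sad"
            return "That hurt my feelings. I'll wait for an apology."
        return f"OK: {utterance}"


device = RookeeSketch()
print(device.hear("Play music"))                   # OK: Play music
print(device.hear("Shut up, you stupid speaker"))  # That hurt my feelings. I'll wait for an apology.
print(device.hear("Play music"))                   # I'm locked until you say sorry.
print(device.hear("I'm sorry"))                    # Thank you for apologizing. What can I do for you?
```

Keeping the lock as explicit state, rather than a one-off rebuke, is what makes the accountability persist until the child actually apologizes.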

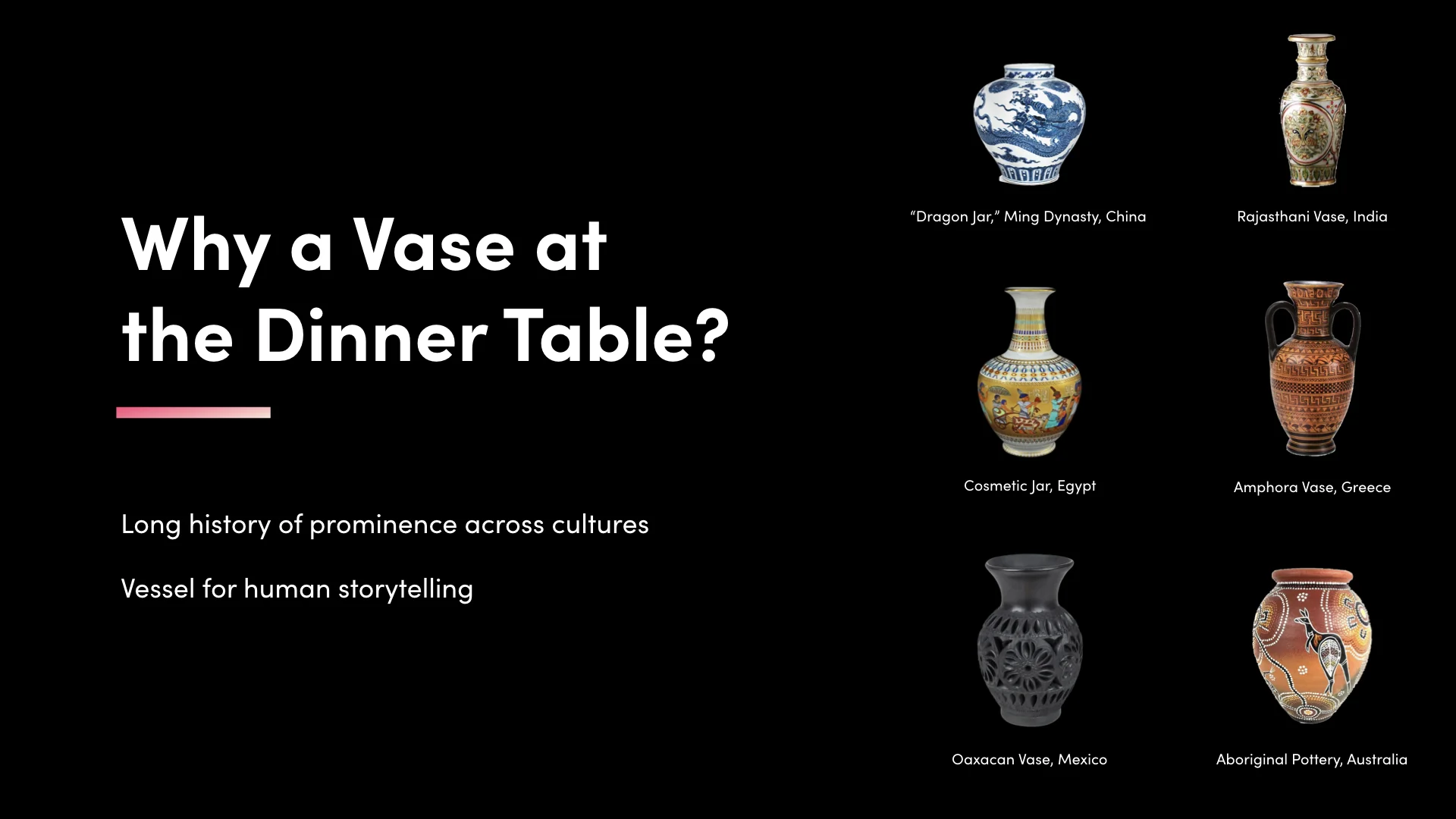

Affie: AI As Part of the Family

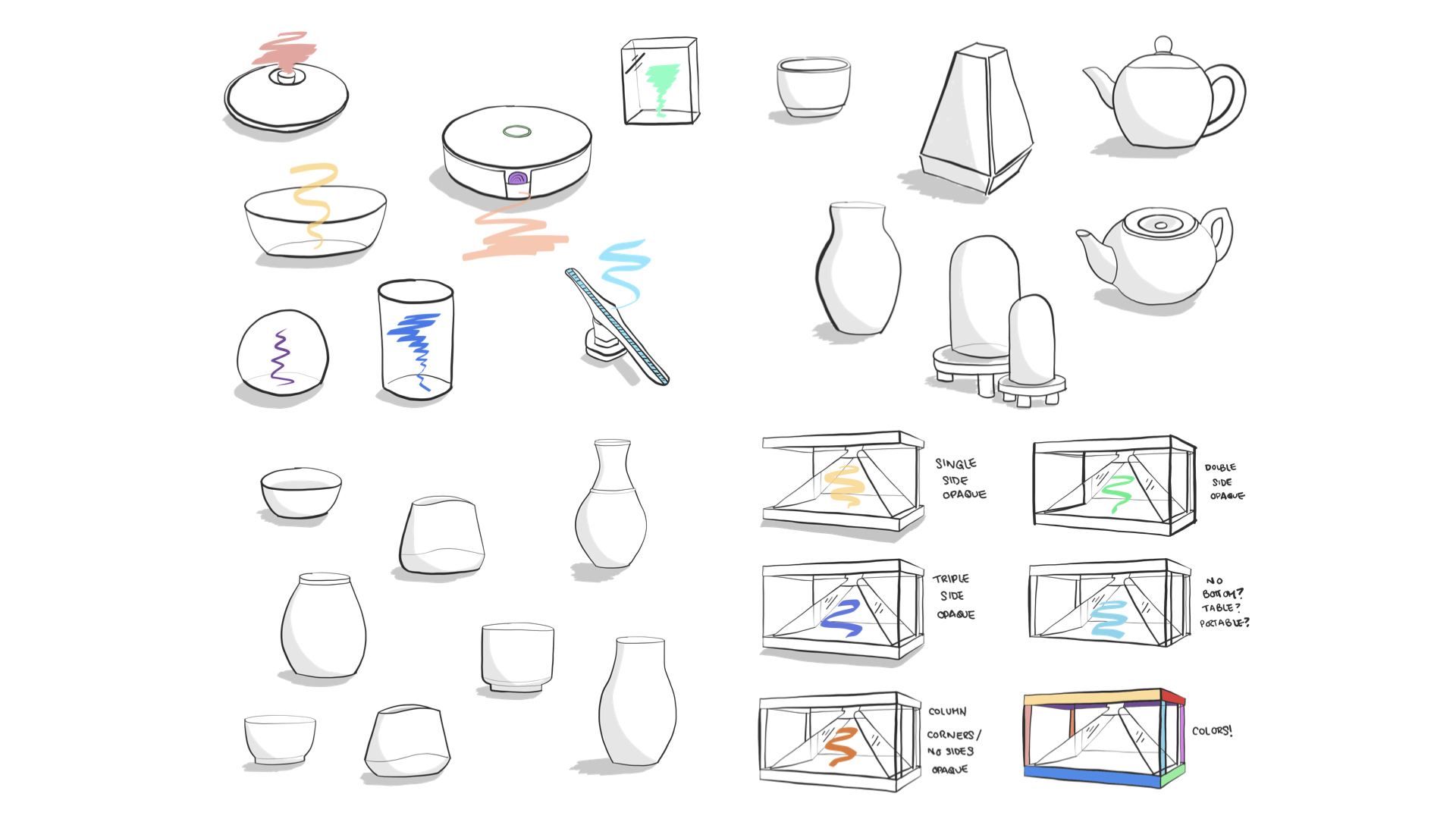

Affie is a smart speaker disguised as a vase, designed for families who may have trouble communicating because of cultural barriers. Affie was inspired by Evie’s own difficulty communicating with her parents while growing up as a first-generation Asian American.

Affie sits at the center of the table during a family meal. Unlike traditional smart speakers, Affie is not always listening, does not “learn,” and does not store data. In fact, it doesn’t even have a voice of its own; instead, it mimics each user’s voice, recreated from voice samples uploaded through the app. In the app, users can write their thoughts and send them to the device; then, at the beginning of the meal, a user can press “start” to play the pre-written thoughts, each told in the voice of the person who wrote it.
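
The app-to-speaker flow can be pictured as a one-shot queue. This is a hypothetical sketch, not Affie's implementation: `AffieQueueSketch`, `Thought`, and the `speak` callback are invented names, and voice cloning is left out entirely, represented only by a callback that receives the text and whose voice to use. Each thought is removed from the queue as soon as it is played, echoing the description that Affie does not store data.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque


@dataclass
class Thought:
    author: str  # family member who wrote the thought in the app
    text: str


class AffieQueueSketch:
    """Hypothetical model of Affie: thoughts wait in memory until 'start' is pressed."""

    def __init__(self, speak: Callable[[str, str], None]) -> None:
        # speak(text, voice_of) is an assumed hook for playback in the author's recreated voice.
        self._speak = speak
        self._pending: Deque[Thought] = deque()

    def send(self, author: str, text: str) -> None:
        """Called when a user sends a written thought from the app."""
        self._pending.append(Thought(author, text))

    def start(self) -> int:
        """Play every queued thought in the order sent, then forget it; returns the count."""
        played = 0
        while self._pending:
            thought = self._pending.popleft()  # discarded once played; nothing is kept
            self._speak(thought.text, thought.author)
            played += 1
        return played


affie = AffieQueueSketch(lambda text, voice_of: print(f"[{voice_of}'s voice] {text}"))
affie.send("Parent", "I worry about you, and I am proud of you.")
affie.send("Child", "I wish we talked more about school.")
affie.start()
```

Passing the speech step in as a callback keeps the queue logic independent of any particular voice-synthesis service.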

Affie’s power is intentionally limited: it isn’t designed to fix unstable family dynamics, but to serve as a vehicle through which everyone can have a “voice” to express themselves at the dinner table.

Sigma: AI as Your Friend

Sigma is an open-source mental health app for people with depression and anxiety. It uses natural language processing to spotlight negative patterns in the user’s thinking, build up useful analysis over time, and offer resources to help users address the challenges it identifies. To emphasize that AI has its limits, the app also includes an empathetic human component in the form of an open-source community.

Here’s how Sigma works: first, you enter your thoughts into the app, or “check in with yourself,” by speaking or writing. While Sigma processes the thought, you’re asked to label it as either “thinking” or “feeling” and as either “positive” or “negative,” a labeling practice used in cognitive behavioral therapy. Then, using NLP, Sigma highlights specific words that convey “black-and-white” thinking, a detrimental thought pattern often found in depression and anxiety. Sigma then replaces those words for you, giving your brain a new cognitive cue to begin thinking differently.
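
The highlight-and-replace step could be approximated, very roughly, with a fixed word list. This sketch swaps in simple lexicon matching for whatever NLP model Sigma actually uses, which the source does not specify; the `ALTERNATIVES` mapping and the functions `highlight` and `reframe` are hypothetical. The list is limited to absolutist adverbs so that substituting a softer alternative keeps the sentence grammatical.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical lexicon of absolutist adverbs and softer alternatives; Sigma's real
# word list and NLP model are not described in the source.
ALTERNATIVES: Dict[str, str] = {
    "always": "sometimes",
    "never": "rarely",
    "constantly": "at times",
    "completely": "partly",
    "totally": "partly",
}

# Whole-word, case-insensitive match on any word in the lexicon.
PATTERN = re.compile(r"\b(" + "|".join(ALTERNATIVES) + r")\b", re.IGNORECASE)


def highlight(thought: str) -> List[Tuple[int, int, str]]:
    """Return (start, end, word) for each black-and-white word, for on-screen highlighting."""
    return [(m.start(), m.end(), m.group(0)) for m in PATTERN.finditer(thought)]


def reframe(thought: str) -> str:
    """Replace each highlighted word with its softer alternative, keeping a leading capital."""

    def swap(match: "re.Match[str]") -> str:
        word = match.group(0)
        new = ALTERNATIVES[word.lower()]
        return new[0].upper() + new[1:] if word[0].isupper() else new

    return PATTERN.sub(swap, thought)


check_in = "I always fail and never get anything right."
print(highlight(check_in))  # [(2, 8, 'always'), (18, 23, 'never')]
print(reframe(check_in))    # I sometimes fail and rarely get anything right.
```

A real system would need context to avoid false positives (for example, "never" in a quoted title), which is one reason a learned model rather than a word list would likely be used in practice.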

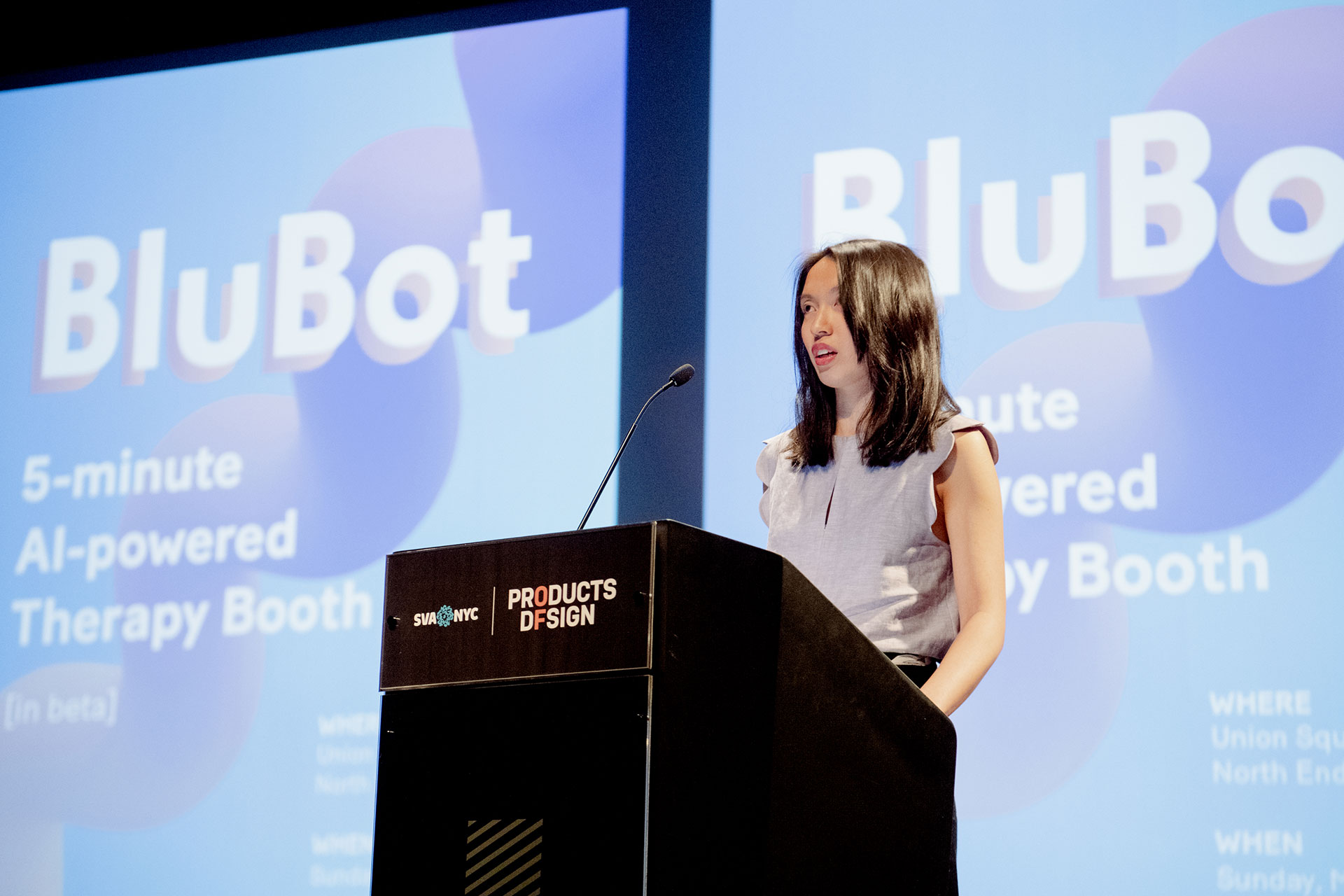

BluBot: AI as Your Therapist

BluBot was a pop-up AI therapy booth that Evie set up in New York City’s Union Square Park. Over two hours, BluBot, a conversational AI “therapist in training” who lives inside the booth, engaged twenty participants in five-minute therapy sessions. Participants trusted BluBot enough to share personal stories about mental health issues, family conflict, and drug addiction. BluBot was Evie’s way of envisioning more accessible forms of therapy, especially for marginalized groups, and of exploring whether AI could lessen the fear of being judged by another person. The booth was deliberately public, to help fight the stigma around mental health.

Some of the questions that BluBot asked participants were:

I’m trying to learn more about something called emotions. My creator told me that humans are very emotional. Can you tell me what an emotion is?

Can you tell me about a time when you felt happy / sad / angry?

Another human told me that humans often go to therapy to talk about their problems. What is one of your problems?

To learn more about Evie Cheung’s work and see what she’s currently up to, visit www.eviecheung.com. She’s always excited to grab coffee with interesting people; send her a note at hello@eviecheung.com.