Deafscape and Hearingscape: Communication Beyond Spoken Language

In his thesis Deafscape and Hearingscape: Communication Beyond Spoken Language, Yuancong Jing reframes communication design by focusing not on "fixing" Deaf experiences to fit hearing norms, but on creating shared spaces where both communities can thrive equally. Through interviews with CODAs (Children of Deaf Adults), DHPs (Deaf Children of Hearing Parents), sign language interpreters, and special education experts, he uncovered a critical pain point: the imbalance of language proficiency within families.

“Sometimes I feel like I’m living in the same house, but not at the same moment.”

— A CODA reflecting on growing up in a Deaf-Hearing household

This imbalance often leads to emotional disconnection, even when families have access to communication technology. Recognizing that many current technologies try to adapt Deaf individuals to hearing-centric systems, Yuancong chose instead to design for mutual adaptation. He describes this adaptation as a “two-way bridge where communication feels natural and effortless, like air moving through a shared room.”

Throughout his research and design work, Yuancong embraced the concept of Deaf Gain: the idea that Deafness is not a disability to be overcome but a unique sensory and cognitive experience that enriches human understanding. His thesis invites us to imagine a world where communication is defined by the full spectrum of human expression, not by sound alone.

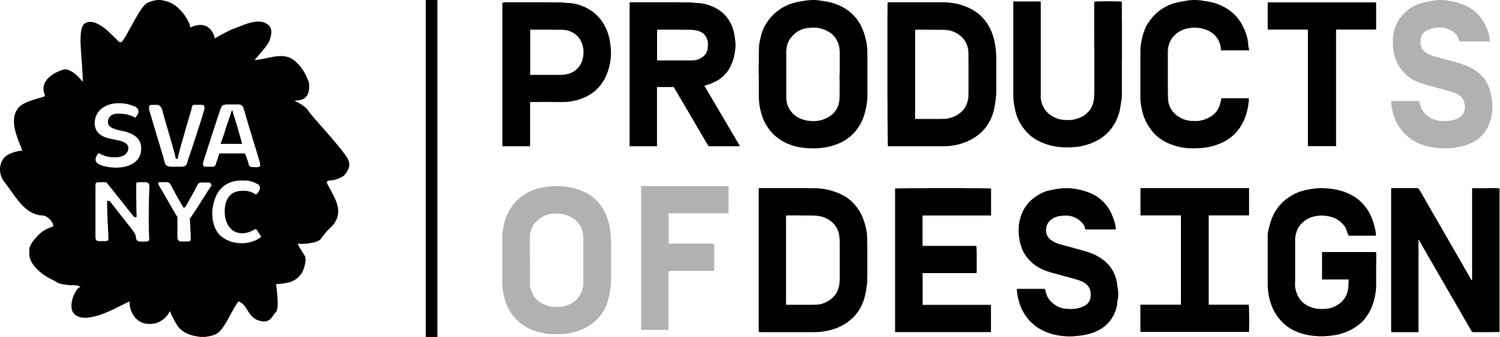

SignSight: A Modular Camera–Projector System

SignSight is a modular smart-home device that helps mixed-language, Deaf-hearing households feel more connected. Combining a rotatable MediaPipe-powered camera with a projector, SignSight translates sign language into visual subtitles and visualizes everyday sounds as light cues. Whether it’s someone signing in the kitchen or the sound of running water, SignSight brings all household members into the same shared moment, with no apps or handoffs needed.

Designed specifically for families with CODAs (Children of Deaf Adults) and DHPs (Deaf Children of Hearing Parents), SignSight addresses the gaps in day-to-day communication that often lead to emotional distance. It recognizes that while family members may live under the same roof, they can still feel worlds apart when their primary languages don’t align.

The system’s strength lies in its ambient, always-on approach. Rather than demanding a deliberate interaction—opening an app, pressing a button—SignSight works in the background, offering seamless communication support as part of daily life. The camera translates signed language into spatial subtitles projected onto shared surfaces like tables or walls, while the projector also provides visual alerts for environmental sounds like doors opening or water running.

This shift toward spatialized, shared awareness transforms the home into a more inclusive space. It allows family members to stay attuned to one another’s presence without shouting across rooms or relying on intermediaries. As one CODA put it, “Now we just text across the house. We live together, but we don’t really talk that much.” SignSight gently counters this with a quiet, embodied reminder: communication can be seen, felt, and shared—together, in real time.

SignSight Interactive Hub: Learning Sign Language as a Visual Grammar

SignSight Interactive Hub is an app that teaches sign language as a structured, three-dimensional language. Using pose recognition and MediaPipe motion tracking, the system breaks signs down into their components—handshape, movement, orientation, location, and facial expression—and offers real-time feedback as users practice. By showing sign language as a spatial, expressive whole, the Interactive Hub opens a visually native path to fluency.

“Sign language isn’t just about hands. It’s where, how, and who you’re signing to. Without structure, it’s like learning English from a fridge magnet set.”

— Yuyin Liang, Professor of Special Education

Unlike many sign language apps that offer isolated vocabulary (“apple,” “thank you,” “good morning”), the Interactive Hub emphasizes the underlying grammar that makes conversation possible. It treats sign language not as a set of hand motions, but as a rich linguistic system built in space and time.

The app’s feedback system is intuitive and precise. Learners can focus on individual components—how a hand moves, where it’s placed, what the face is doing—and see immediate visual corrections. This structured breakdown allows users to understand not just what to sign, but why it’s signed that way, bringing the kind of fluency that word-by-word learning can’t provide.
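The component-by-component feedback described above can be illustrated with a minimal sketch. It assumes each sign has already been reduced to one label per component (in a real system these would presumably be derived from MediaPipe landmarks). The `SignComponents` type, the `component_feedback` function, and the label values are hypothetical, chosen only for illustration, and are not taken from the thesis.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SignComponents:
    """One label per component named in the text (hypothetical representation)."""
    handshape: str
    movement: str
    orientation: str
    location: str
    facial_expression: str


def component_feedback(reference: SignComponents, attempt: SignComponents) -> list[str]:
    """Return one hint for each component where the attempt differs from the reference."""
    hints = []
    for field in fields(SignComponents):
        expected = getattr(reference, field.name)
        observed = getattr(attempt, field.name)
        if expected != observed:
            hints.append(f"{field.name}: expected '{expected}', saw '{observed}'")
    return hints


# Illustrative labels only; they do not describe any real sign.
reference = SignComponents("flat-hand", "arc-forward", "palm-up", "chin", "neutral")
attempt = SignComponents("flat-hand", "arc-forward", "palm-down", "chin", "neutral")

print(component_feedback(reference, attempt))
# → ["orientation: expected 'palm-up', saw 'palm-down'"]
```

Keeping the comparison per component, rather than scoring the sign as a whole, is what would let a learner see which part of the sign to adjust.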

For CODAs, educators, and curious learners, the Interactive Hub lowers the barrier to meaningful communication. It bridges the frustrating gap between interest and fluency, offering a tool that respects the complexity of Deaf culture and language. More than just language acquisition, it fosters cultural empathy and invites hearing users to approach sign language on its own visual terms: not as a translation, but as a way of seeing and expressing the world.

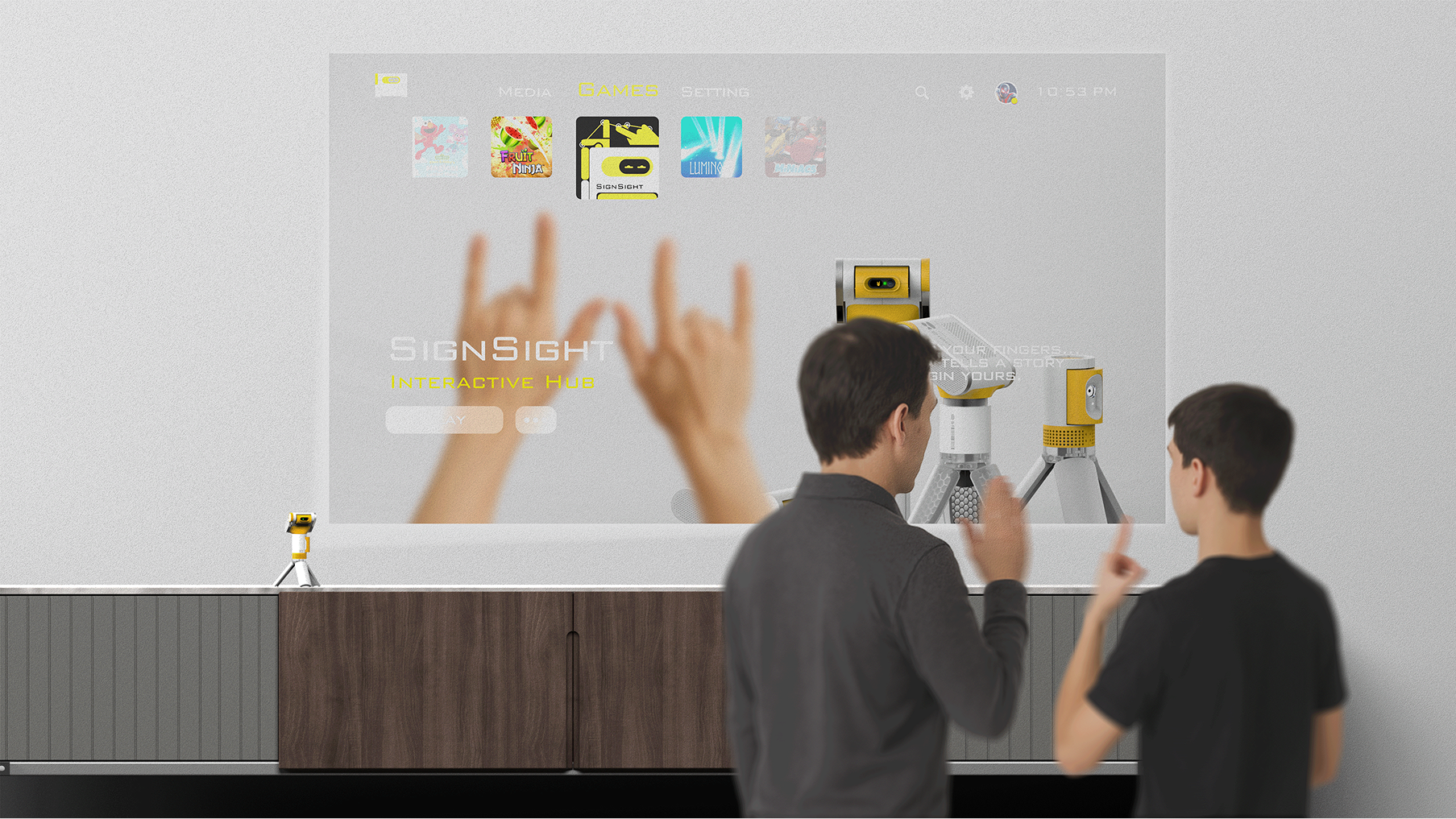

MOOD: Visualizing Emotional Tone Beyond Subtitles

MOOD is an app that gives Deaf people real-time emotional context alongside spoken subtitles. Using voice emotion recognition, it translates spoken tone into color-coded visual cues, dynamic rings that pulse with intensity, and an emotional map of the conversation. In tense or nuanced situations like job interviews or medical visits, MOOD lets Deaf users both read what was said and sense the intent behind it.

For many Deaf individuals, captions have long provided access to words—but not to the emotional nuance that gives those words weight. Sarcasm, excitement, hesitation, or tenderness often vanish in translation, leaving behind flat text and frayed connections. As one Deaf sign language interpreter put it, “I can see the words. But I can’t feel their tone.”

MOOD addresses this disconnect with subtlety and precision. Rather than replacing captions, it layers them with a new dimension of meaning: emotional tone. The app analyzes voice patterns in real time—tracking shifts in energy, affect, and rhythm—and interprets them into intuitive visual elements that accompany the subtitles. A flash of yellow might signal joy. A pulsing red ring could indicate frustration. Together, these cues give Deaf users access to the emotional subtext that hearing people often take for granted.
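As a rough sketch of how a recognized tone might be turned into a visual cue, the snippet below maps an emotion label and an intensity score to a color and a ring pulse rate. Only the yellow-for-joy and red-for-frustration pairings come from the description above. The `ToneCue` type, the `tone_to_cue` function, the gray fallback, and the pulse-rate formula are assumptions made for illustration, and the emotion recognizer itself is out of scope here.

```python
from dataclasses import dataclass

# Yellow/joy and red/frustration follow the text; the neutral fallback is an assumption.
TONE_COLORS = {"joy": "yellow", "frustration": "red", "neutral": "gray"}


@dataclass(frozen=True)
class ToneCue:
    color: str       # color shown alongside the subtitle
    pulse_hz: float  # how fast the ring pulses


def tone_to_cue(label: str, intensity: float) -> ToneCue:
    """Map a tone label and an intensity in [0, 1] to a visual cue (hypothetical scheme)."""
    level = min(max(intensity, 0.0), 1.0)  # clamp out-of-range scores
    color = TONE_COLORS.get(label, TONE_COLORS["neutral"])  # unknown labels fall back to gray
    return ToneCue(color=color, pulse_hz=0.5 + 1.5 * level)  # assumed range: 0.5 to 2.0 Hz


print(tone_to_cue("frustration", 1.0))
# → ToneCue(color='red', pulse_hz=2.0)
```

Falling back to a neutral cue for unrecognized labels keeps the display calm when the recognizer is unsure, rather than guessing at an emotion.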

The design avoids theatrics in favor of clarity. Its interface remains clean and adaptable, supporting conversations that range from high-stakes negotiations to casual check-ins. Users can track how a speaker’s tone changes over time, helping them detect moments of discomfort, empathy, or urgency that might otherwise go unnoticed. MOOD doesn’t aim to simulate hearing. Instead, it reframes emotional access as a core component of communication equity. By making tone visible, it allows Deaf users to participate more fully in the emotional dynamics of spoken conversation—deepening mutual understanding, and helping bridge the gap between what is said and what is meant.

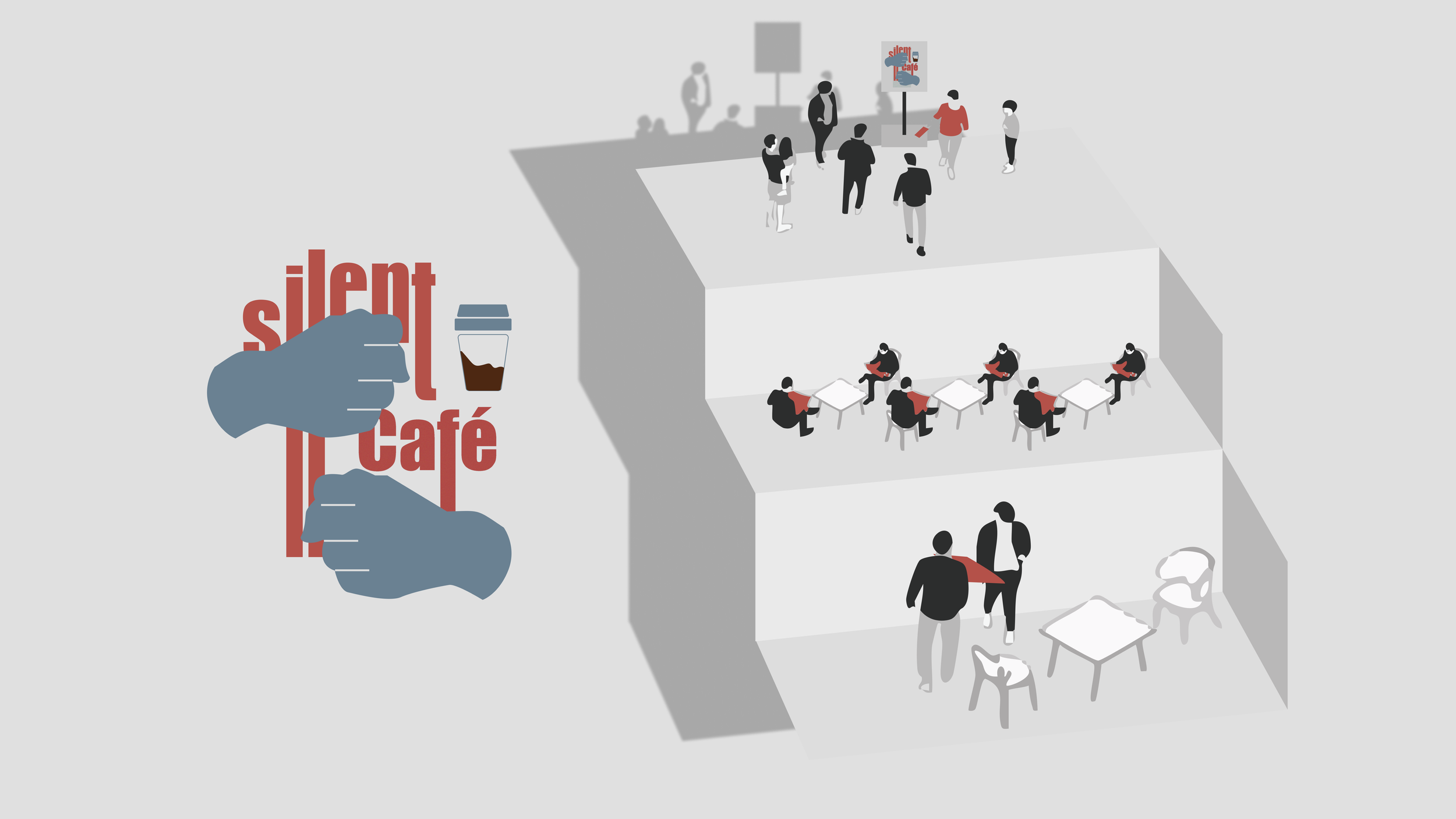

Silent Coffee Chat: An Immersive Experience of Nonverbal Connection

Silent Coffee Chat is an interactive, nonverbal experience designed for hearing people to appreciate the richness of communication without sound. Over a quiet coffee, participants work together on simple tasks using only gaze, gestures, and shared props, then reflect on what they learned. The experience reveals the depth and intimacy that can emerge when presence replaces speech, and reminds us that silence can be a language of its own.

The space is gently choreographed with visual prompts, gesture-based challenges, and shared objects that require cooperation and attentiveness. As participants move through a series of micro-interactions—greeting, storytelling, puzzle-solving—they come to rely on gaze, posture, facial expressions, and gesture. Without sound, presence deepens.

“This is not a simulation of Deaf experience,” Yuancong explains. “Instead, it’s an invitation to reframe silence as something meaningful. The absence of speech makes room for another kind of connection—more embodied, more attentive, and often more intimate.” As one participant reflected: “In the silence, I became more aware of the other person… I didn’t miss the sound. I felt more connected.” Silent Coffee Chat doesn’t aim to teach sign language; it teaches something rarer: respect for silence as communication.

For a deeper look into Yuancong’s thesis process, research, and reflections, explore the following links:

Thesis Repository on Notion – a comprehensive archive of Yuancong’s thesis development

axisesdesign.webflow.io – Yuancong’s design website